In modern electric grids, grid operators must continually balance power demand with power generation to maintain steady grid frequency and ensure overall grid stability. This entails anticipating, in real time, short-term weather-driven changes in both customer load and renewables output to match the remaining “net demand” with dispatchable generation. Grid planners, on the other hand, look far into the future to ensure long-term “resource adequacy” — i.e., that future generation resources will be sufficient to cover demand under all possible weather scenarios and grid conditions.

Satisfying resource adequacy through long-term grid planning processes is a critical component of grid reliability because generation resources and transmission infrastructure can’t be built in the few days ahead of a forecasted heat wave or winter storm.

To study long-term resource adequacy, grid planners:

Resource adequacy studies, in other words, rely on an accurate characterization of future weather variability. In practice, grid planners typically use historical weather as a proxy for future weather — tacitly assuming that a) future weather will be similar to historical weather and b) historical weather is a large enough sample to assess the risk of extreme events.

Unfortunately, both of these assumptions are wrong. Future weather will differ from historical weather. Average temperatures, wind speeds, and irradiance are all expected to shift over time because of climate change, to varying degrees and in different directions depending on the region being studied. Moreover, the shape of weather distributions will also change. For example, climate modeling shows that extreme cold events may actually become more frequent in the future as climate change weakens the jet stream, even though average temperatures will rise. In that case, the lower tail of the temperature distribution gets longer while the rest of the distribution shifts right.
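To make the "longer cold tail, warmer average" idea concrete, here is a minimal sketch using made-up numbers. It is not climate model output: the two distributions, the 3% cold-outbreak share, and every parameter below are assumptions chosen only to show that a mean can rise while the 1st percentile falls.

```python
import random
import statistics

rng = random.Random(0)  # fixed seed so the sketch is repeatable
N = 100_000

# Hypothetical "historical" winter temperatures (deg C): a single bell curve.
historical = [rng.gauss(0.0, 5.0) for _ in range(N)]


def draw_future(rng):
    """Draw one hypothetical future temperature.

    Most draws come from a warmer bell curve; a small assumed share (3%)
    comes from a much colder one, standing in for rare cold outbreaks.
    """
    if rng.random() < 0.03:
        return rng.gauss(-15.0, 5.0)
    return rng.gauss(2.5, 5.0)


future = [draw_future(rng) for _ in range(N)]


def summarize(samples):
    """Return the mean, median, and 1st percentile of the samples."""
    cuts = statistics.quantiles(samples, n=100)  # 99 percentile cut points
    return {
        "mean": statistics.fmean(samples),
        "median": cuts[49],  # 50th percentile
        "p01": cuts[0],      # 1st percentile (the cold tail)
    }


hist_stats = summarize(historical)
fut_stats = summarize(future)
print("historical:", hist_stats)
print("future:    ", fut_stats)
```

In this toy setup the future mean and median are higher than the historical ones, yet the future 1st percentile is colder. A planner who only shifted the historical distribution to the right would miss that tail.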

Regarding the second assumption — that we have enough historical weather data to assess extremes — the historical record is much smaller than you might think.

First, we can only use serially complete historical datasets that have values for every hour of the year, because we can’t compute reliable statistics from partial-year data. This generally excludes airport station temperature data before 1980.

Next, we have to find high-quality weather data to model wind and solar generation. Traditionally, that has meant relying on satellite-based irradiance data (available since 1998) and the NREL WIND Toolkit, which is a high-resolution wind speed dataset calculated for 2007-2013.

As a result, a resource adequacy study that includes correlated temperature-driven load, solar generation, and wind generation can only draw on the 7 historical years (2007-2013) that all three datasets share, which drastically limits the view of weather extremes that the grid could face.

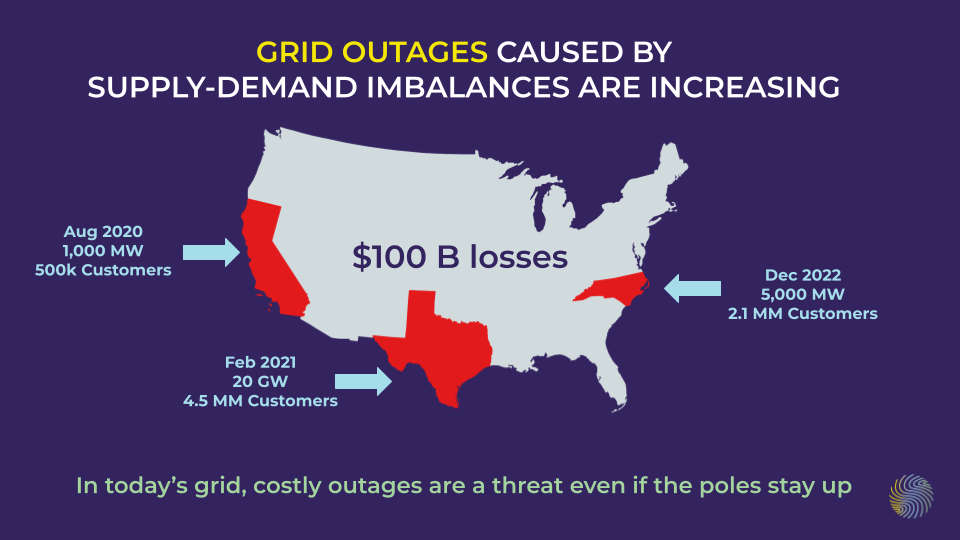
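The seven-year figure follows from intersecting the dataset spans described above, and a quick calculation shows why seven years is a thin sample for extremes. The sketch below is illustrative only: the last year of the open-ended temperature and irradiance records (`ASSUMED_LAST_YEAR`) is an assumption, and the probability formula treats years as independent, which real weather is not.

```python
# Years covered by each dataset, taken from the text. The end year of the
# open-ended records is an assumption made only for this illustration.
ASSUMED_LAST_YEAR = 2023

coverage = {
    "temperature": range(1980, ASSUMED_LAST_YEAR + 1),  # serially complete since 1980
    "irradiance": range(1998, ASSUMED_LAST_YEAR + 1),   # satellite data since 1998
    "wind": range(2007, 2013 + 1),                      # WIND Toolkit, 2007-2013
}


def overlapping_years(coverage):
    """Return the sorted list of years present in every dataset."""
    year_sets = [set(years) for years in coverage.values()]
    return sorted(set.intersection(*year_sets))


def chance_of_seeing_event(return_period_years, n_years):
    """Probability that an event with the given return period occurs at
    least once in n_years, assuming independent years (a simplification)."""
    annual_probability = 1.0 / return_period_years
    return 1.0 - (1.0 - annual_probability) ** n_years


common_years = overlapping_years(coverage)
print(len(common_years), "overlapping years:", common_years)

for return_period in (10, 20, 50):
    p = chance_of_seeing_event(return_period, len(common_years))
    print(f"1-in-{return_period}-year event seen at least once: {p:.1%}")
```

Under these simplifying assumptions, a 1-in-20-year event has only about a 30% chance of appearing even once in a seven-year record, so most such records would contain no example of it at all.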

Sadly, flawed grid planning due to inadequate weather assumptions can lead to catastrophe: major power outages occurred recently in California (2020), Texas (2021), and North Carolina (2022). An NREL post-mortem of all three events found that modern electric grids, which increasingly rely on intermittent, weather-dependent renewable generation, are increasingly affected by extreme weather conditions, and that “weather in recent years has exceeded the bounds of anticipated conditions.” These findings highlight the need for improved planning processes that accurately account for jointly correlated extremes of weather, power generation, and generation outages.

To fill this gap, Sunairio generates a 1,000-path ensemble of future hourly weather to give grid planners robust, complete, and actionable distributions of weather, grid conditions, and asset-level variability. This ensemble is climate-change adjusted, trained on the longest possible series of weather data, and large enough to give insight into future extreme events, in whatever form they may come.